A Quantitative Analysis of Point Clouds from Automotive Lidars Exposed to Artificial Rain and Fog

Abstract

1. Introduction

1.1. Autonomous Driving in Degraded Visibility Environments (DVE)

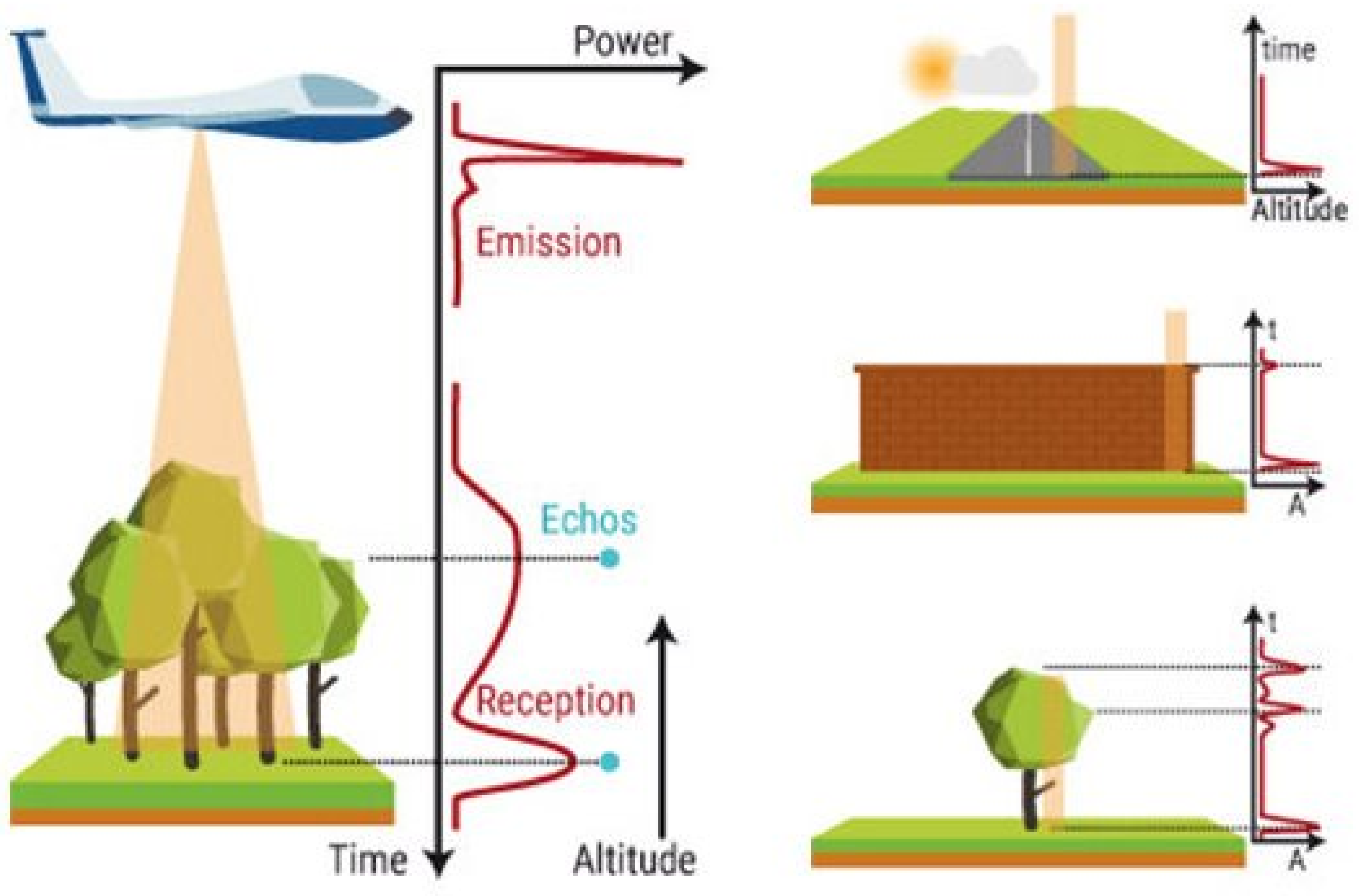

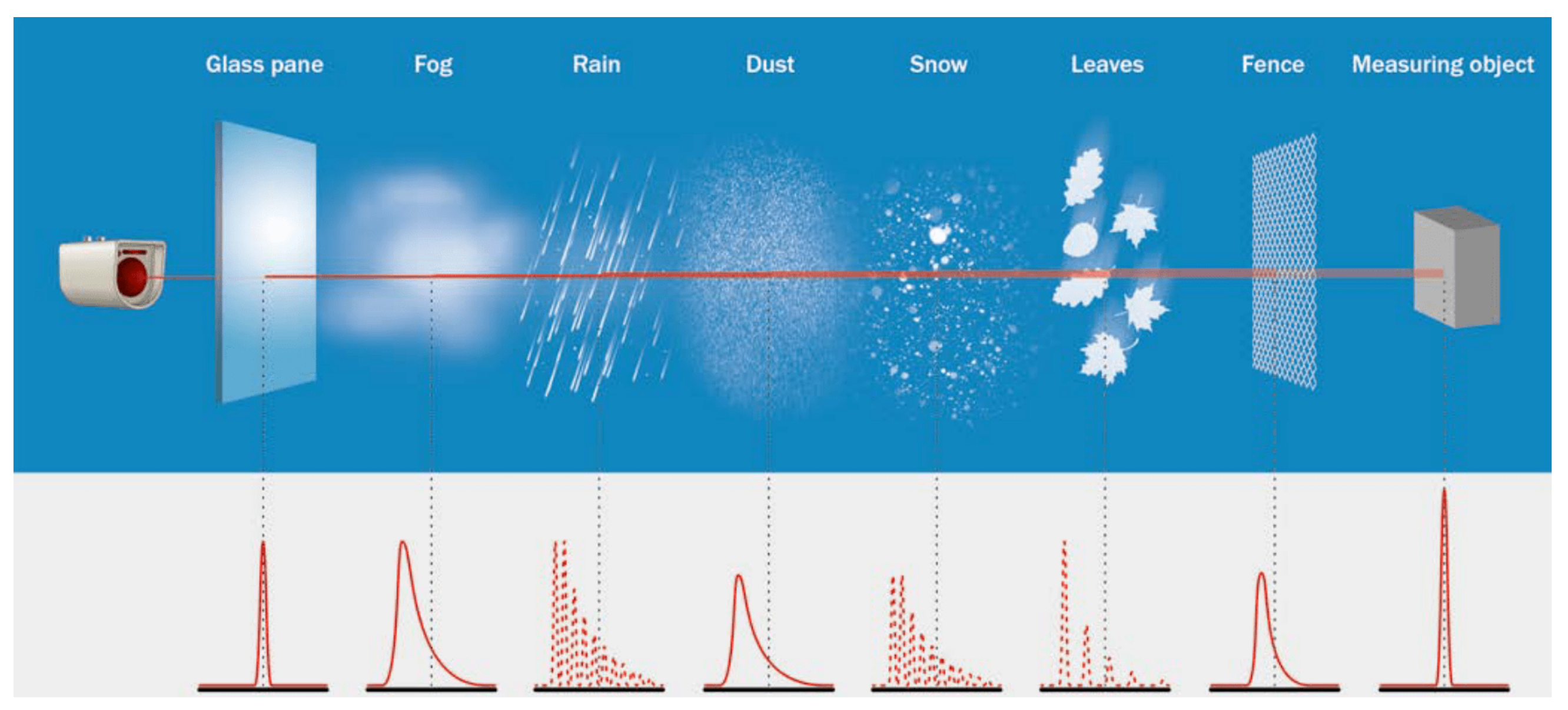

1.2. Lidar Signal

1.3. Contribution and Outline

2. Lidar Sensors in DVE

3. Materials

3.1. Generating Artificial and Controlled Weather Conditions

3.2. Scene Description

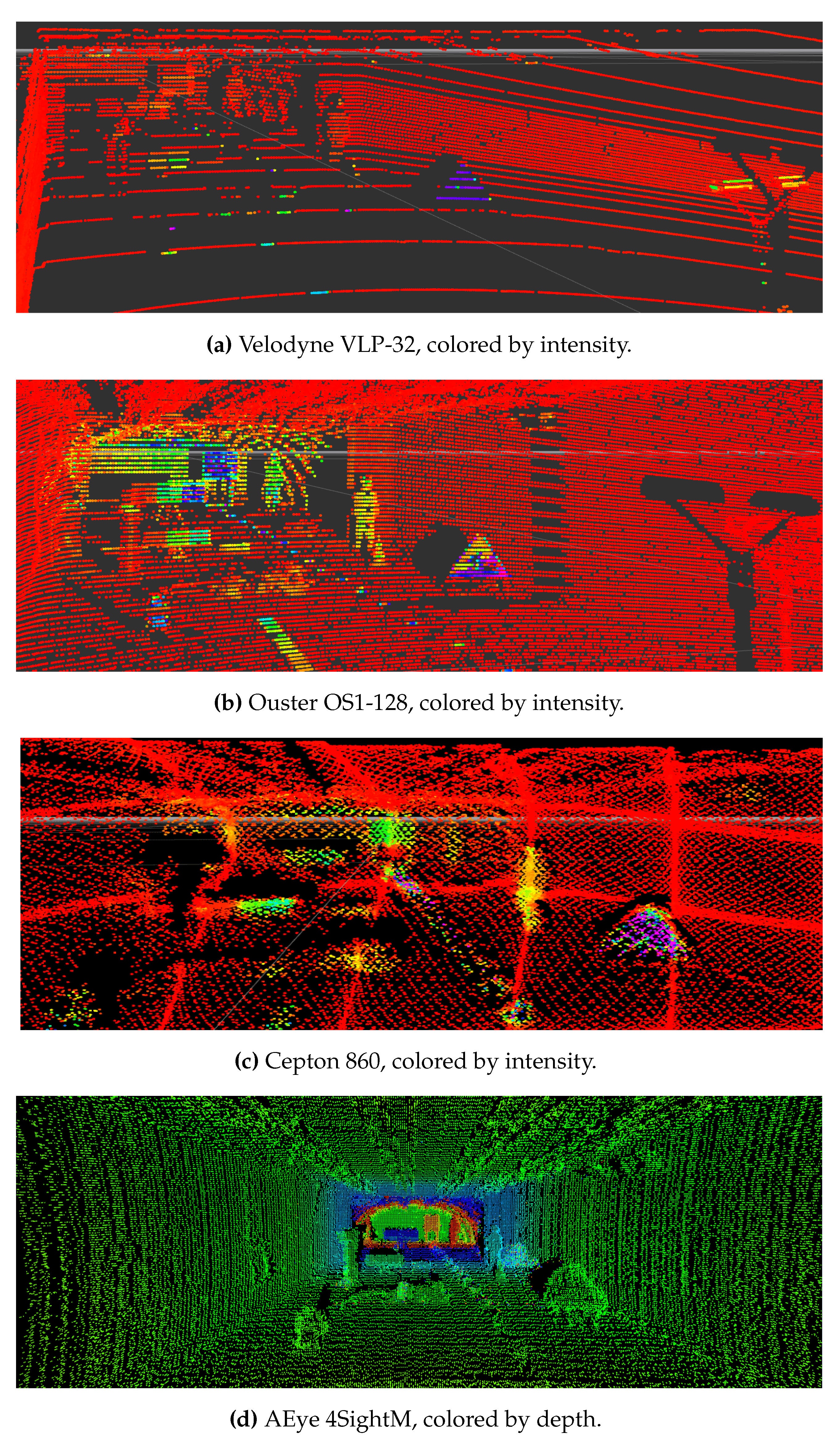

3.3. Lidar Sensors

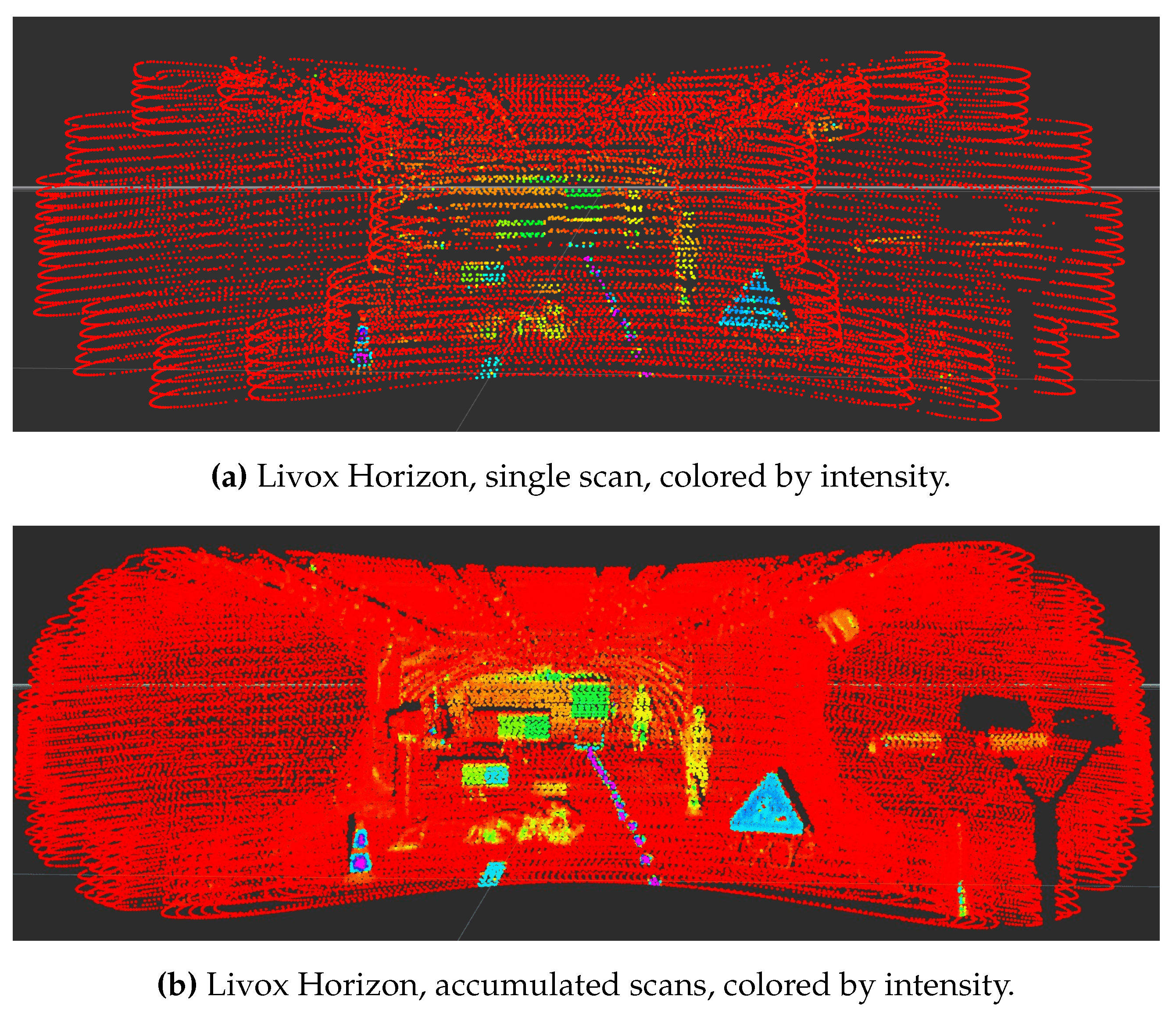

- Livox Horizon [23]

- Velodyne VLP-32 [28]

- Ouster OS1-128 [4]

- Cepton 860 [29]

- AEye 4SightM [30]

3.4. Weather Sensors and DVE Control

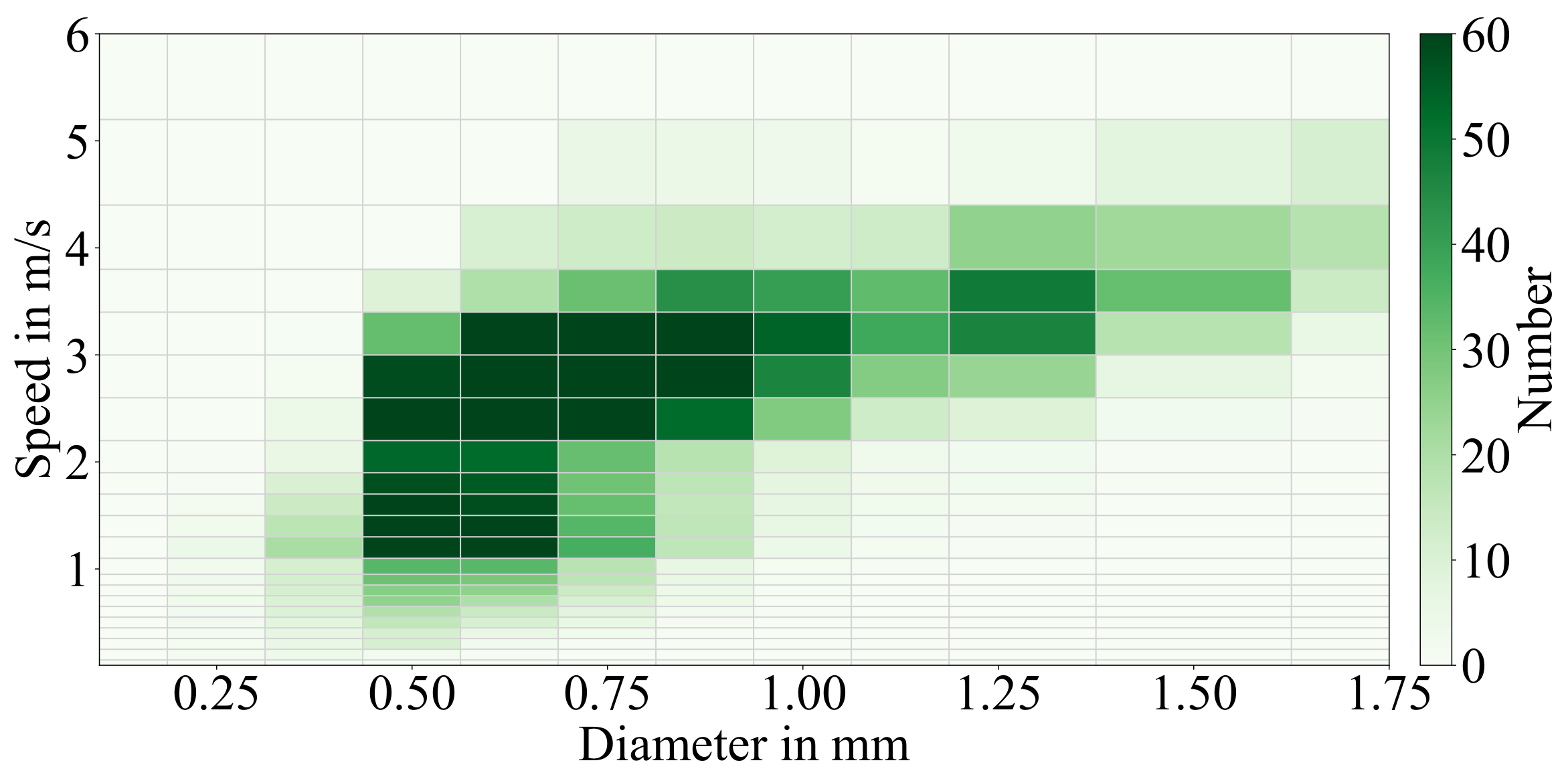

- OTT Parsivel disdrometer [32]

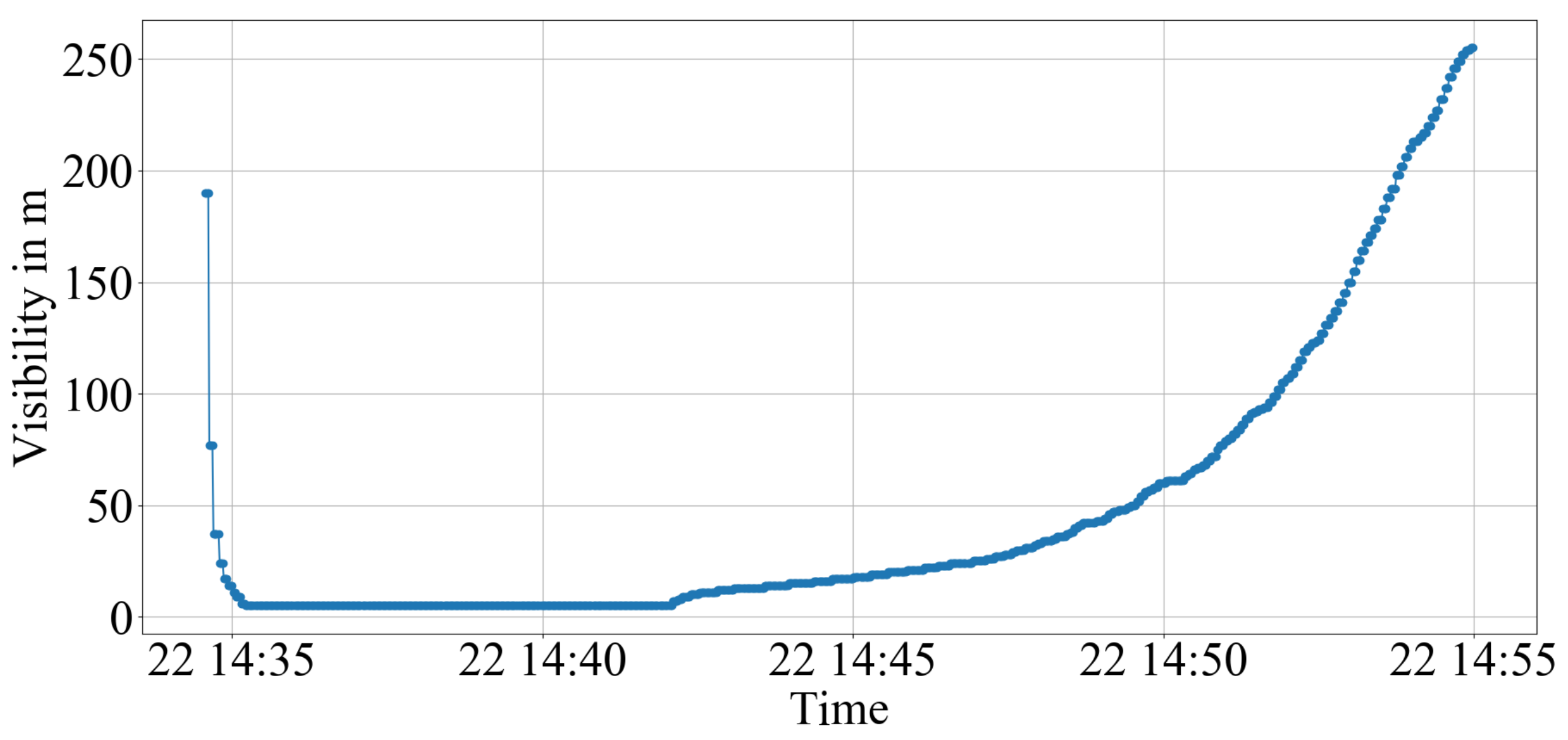

- Transmissometer

- Passive cameras

4. Methodology

- Clear conditions: recordings made before any weather condition is generated, with dry targets.

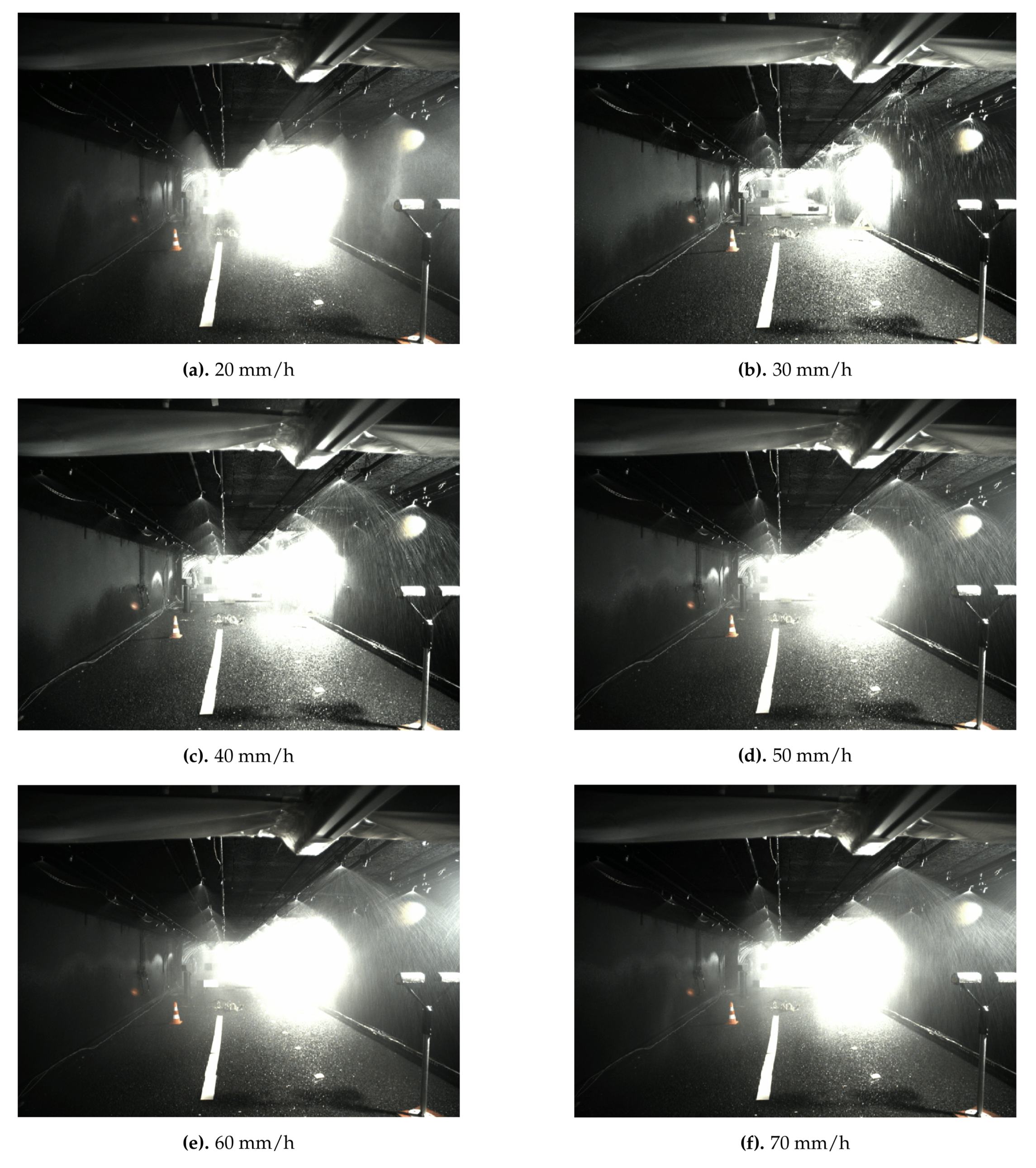

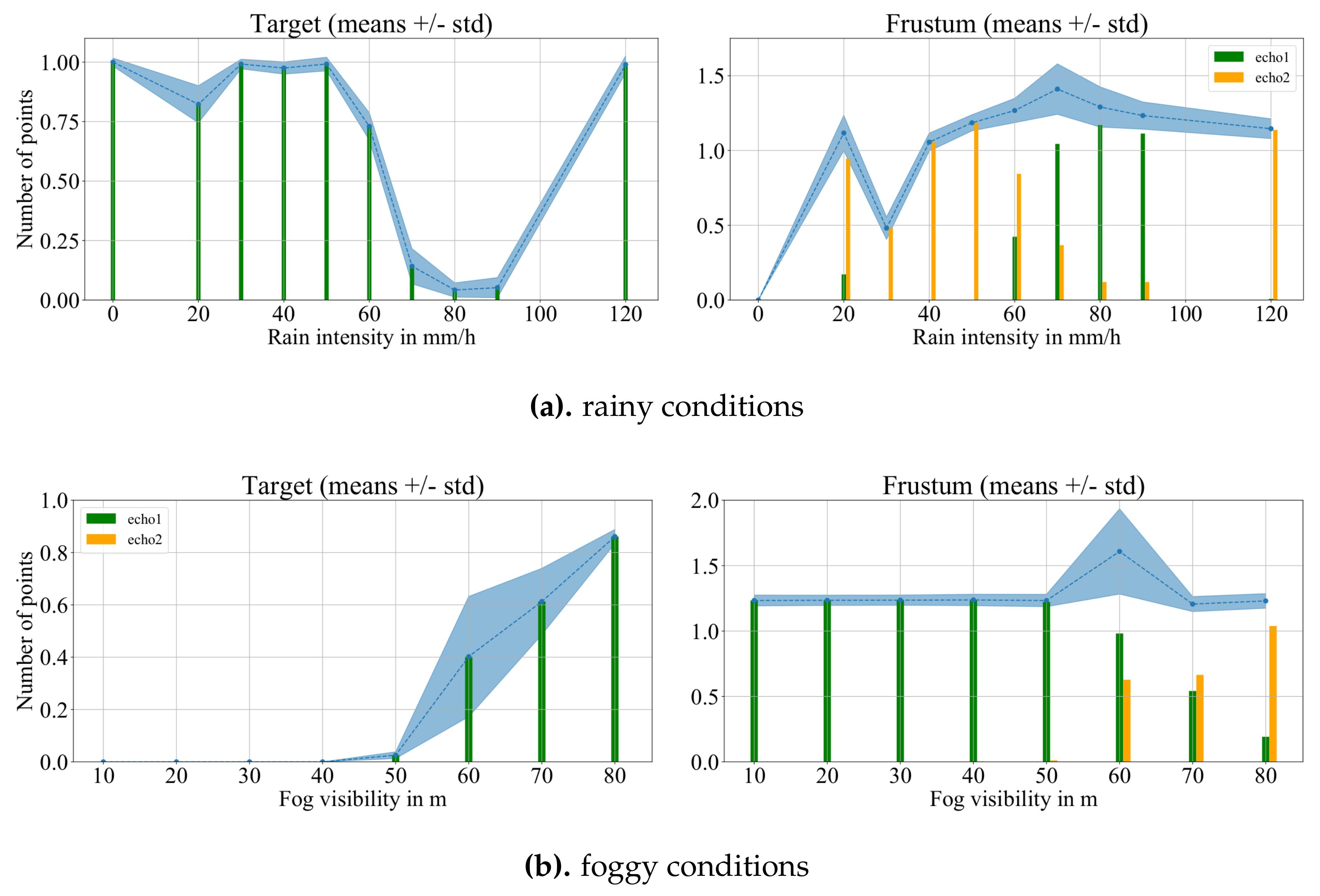

- Rain rate (in mm/h): 20, 30, 40, 50, 60, 70, 80, 90 and 120.

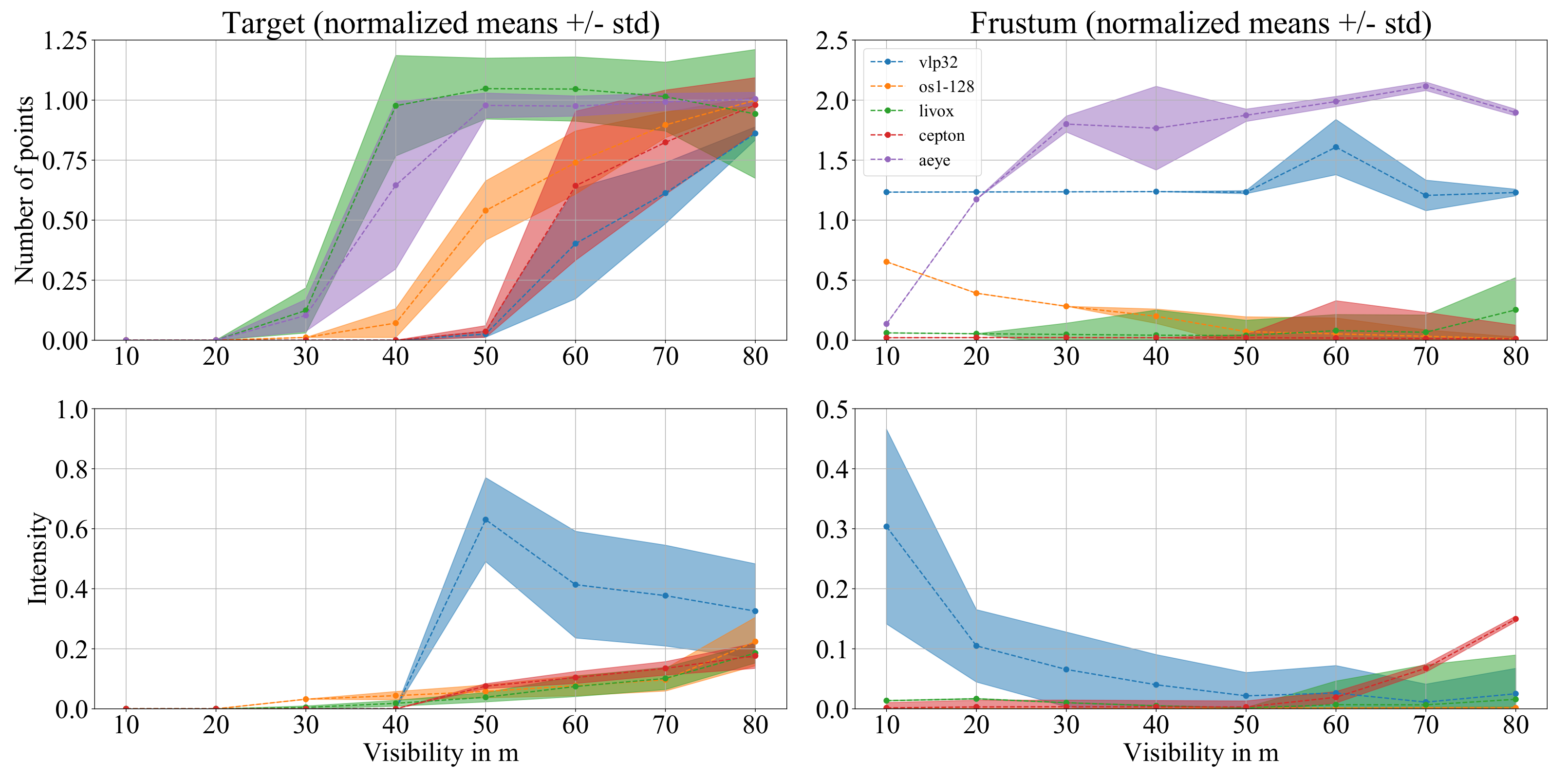

- Fog visibility (in m): 10, 20, 30, 40, 50, 60, 70 and 80.
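The fog conditions above are specified as visibilities. The following is a minimal sketch that relates them to the attenuation of a lidar pulse. It assumes Koschmieder's law with a 5% contrast threshold (σ = −ln(0.05)/V ≈ 3.0/V) and Beer-Lambert attenuation over the sensor-target-sensor path, T = exp(−2σR). It models only the atmospheric transmission factor of the lidar equation. Range-squared losses, target reflectivity and backscatter from droplets are ignored. The function names are illustrative only.

```python
import math

# Assumed contrast threshold for the meteorological optical range (5 %).
# Some texts use 2 %, which gives sigma = 3.912 / V instead of about 3.0 / V.
CONTRAST_THRESHOLD = 0.05


def extinction_coefficient(visibility_m: float) -> float:
    """Extinction coefficient sigma (in 1/m) from Koschmieder's law V = -ln(eps) / sigma."""
    if visibility_m <= 0:
        raise ValueError("visibility must be positive")
    return -math.log(CONTRAST_THRESHOLD) / visibility_m


def two_way_transmittance(visibility_m: float, range_m: float) -> float:
    """Beer-Lambert transmittance over the sensor-target-sensor path, exp(-2 sigma R)."""
    return math.exp(-2.0 * extinction_coefficient(visibility_m) * range_m)


if __name__ == "__main__":
    # Fog visibilities of the protocol; 23 m is the distance of the large target a1.
    for v in (10, 20, 30, 40, 50, 60, 70, 80):
        print(f"V = {v:2d} m  sigma = {extinction_coefficient(v):.3f} 1/m  "
              f"T(23 m) = {two_way_transmittance(v, 23.0):.2e}")
```

Under this convention, a target located exactly at the visibility distance keeps only ε² = 0.25% of its clear-weather return before any other loss. This shows how quickly the return from the 23 m target fades at the lowest visibilities.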

5. Experimental Results

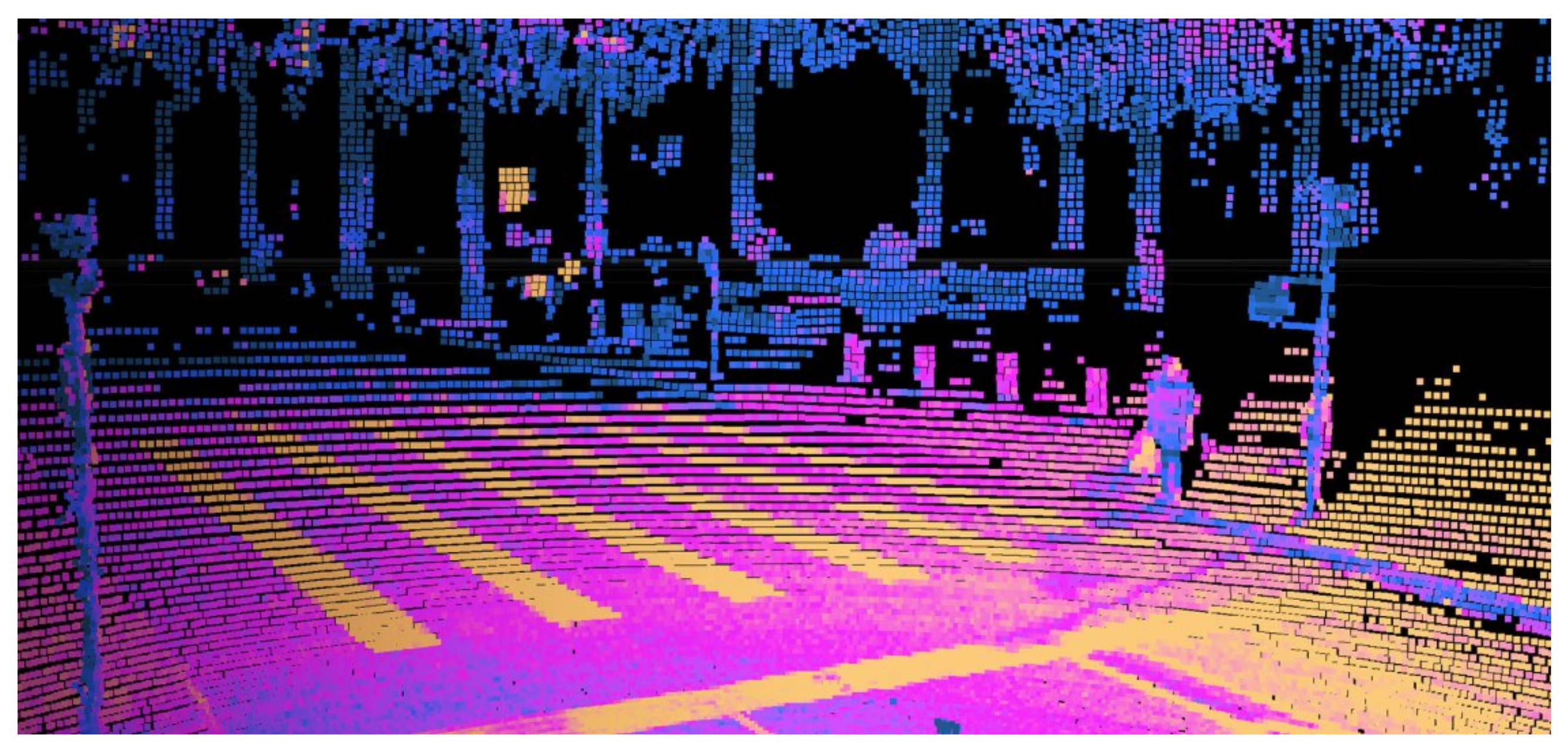

5.1. Clear

5.2. Rainy Weather Conditions

- Visual information

- Speed-diameter histograms

- Target detection

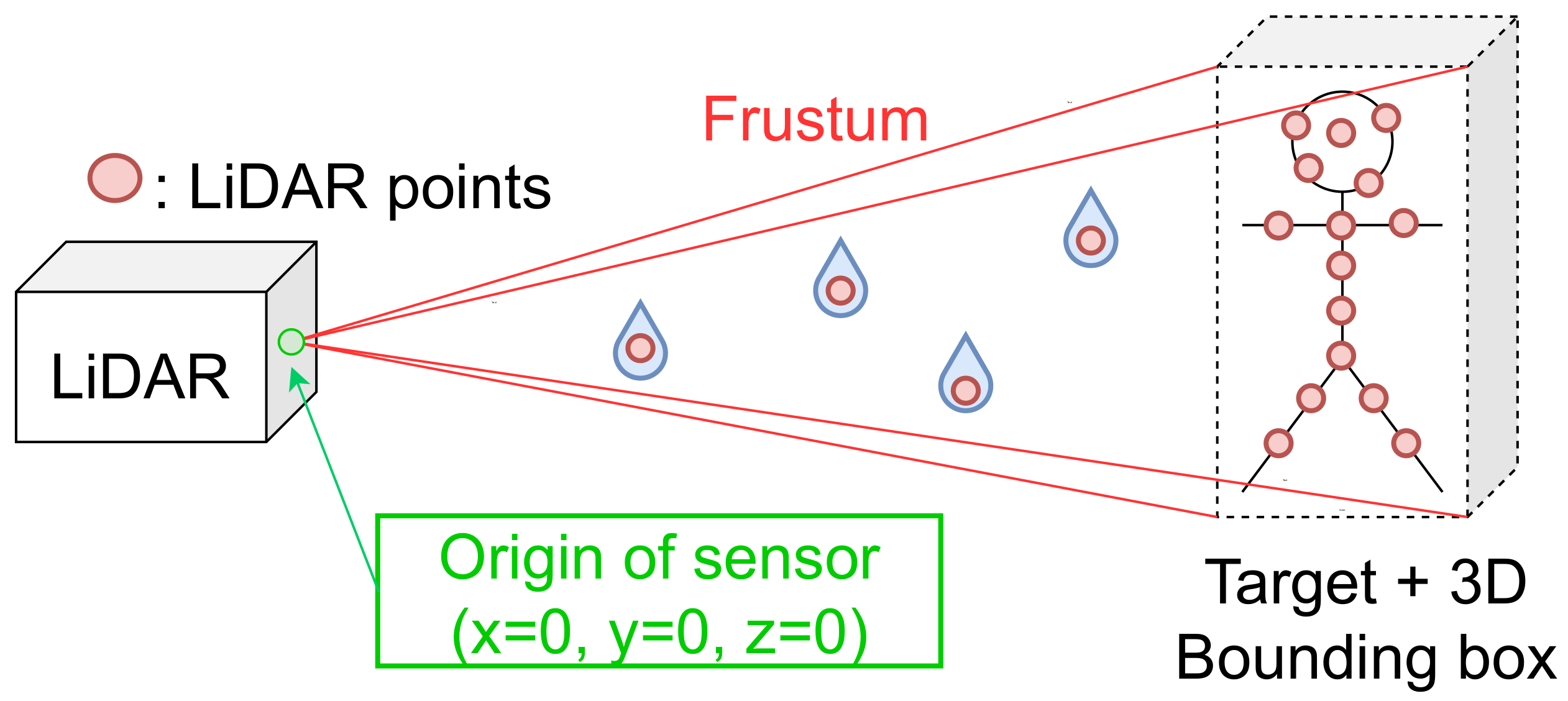

- Sensor-to-target frustum

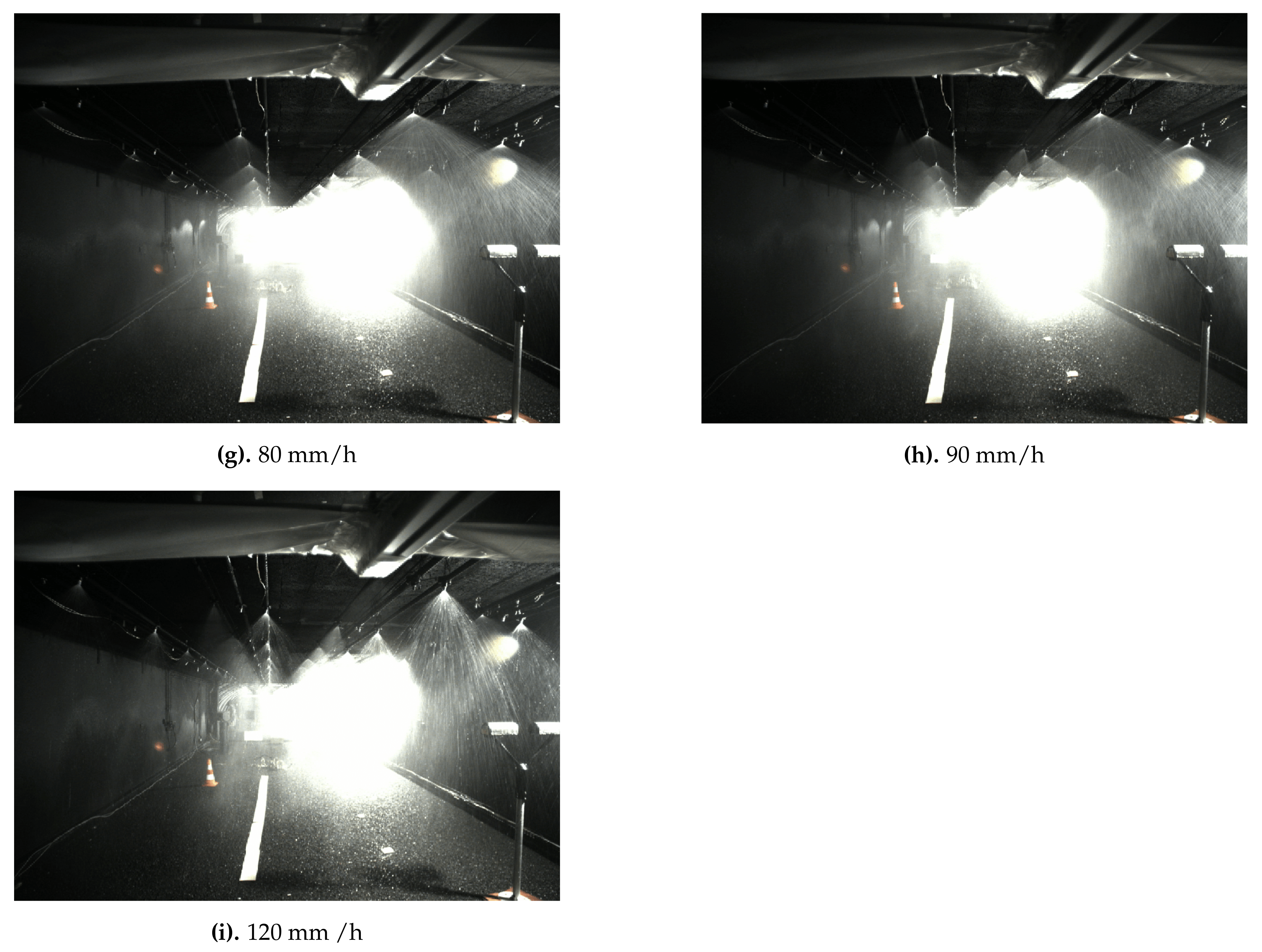
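The disdrometer reports a diameter and a fall speed for each detected drop, and these pairs are accumulated into speed-diameter histograms. The sketch below, in plain Python, bins such pairs and flags detections far from an empirical raindrop fall-speed relation, v(D) = 9.65 − 10.3·exp(−0.6D) (D in mm, v in m/s), attributed to Atlas et al. (1973). In an artificial-rain facility, drops released from a limited height may not have reached terminal velocity (see [34]), which is one reason such a comparison is informative. The ±50% tolerance and the helper names are hypothetical. They are not the processing applied by the OTT software or by the authors.

```python
import math
from bisect import bisect_right


def atlas_terminal_velocity(d_mm: float) -> float:
    """Empirical raindrop terminal fall speed in m/s for a diameter in mm.

    v(D) = 9.65 - 10.3 exp(-0.6 D); clipped at 0 because the fit turns
    negative for very small diameters.
    """
    return max(0.0, 9.65 - 10.3 * math.exp(-0.6 * d_mm))


def is_plausible_drop(d_mm: float, v_ms: float, tolerance: float = 0.5) -> bool:
    """Hypothetical filter: keep detections within +/- tolerance of the fit."""
    v_ref = atlas_terminal_velocity(d_mm)
    return v_ref > 0.0 and abs(v_ms - v_ref) <= tolerance * v_ref


def speed_diameter_histogram(drops, d_edges, v_edges):
    """Count (diameter_mm, speed_ms) pairs into a 2-D grid of half-open bins.

    Returns counts[i][j] for diameter bin i and speed bin j; values outside
    the edges (including the last edge itself) are ignored.
    """
    counts = [[0] * (len(v_edges) - 1) for _ in range(len(d_edges) - 1)]
    for d, v in drops:
        i = bisect_right(d_edges, d) - 1
        j = bisect_right(v_edges, v) - 1
        if 0 <= i < len(d_edges) - 1 and 0 <= j < len(v_edges) - 1:
            counts[i][j] += 1
    return counts


if __name__ == "__main__":
    drops = [(1.0, 4.0), (2.0, 6.5), (0.5, 9.0)]  # illustrative values only
    kept = [p for p in drops if is_plausible_drop(*p)]
    print(kept)  # [(1.0, 4.0), (2.0, 6.5)]
    print(speed_diameter_histogram(drops, [0, 1, 2, 3], [0, 5, 10]))
```

The third illustrative detection (0.5 mm falling at 9 m/s) is far faster than the fit predicts (about 2 m/s), so it is rejected. Such outliers typically indicate splashing or coincident drops.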

5.3. Foggy Weather Conditions

- Target detection

- Sensor-to-target frustum
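Returns located between a sensor and a target, inside the sensor-to-target frustum, are attributed to airborne droplets rather than to the target. The following is a minimal sketch of this membership test. It assumes the sensor sits at the origin of the point cloud and approximates the pyramidal frustum by a cone whose half-angle is the one subtended by the target's half-width. The `margin` parameter, which keeps points on the target surface out of the count, uses a hypothetical default value.

```python
import math


def in_sensor_to_target_frustum(point, target_center, half_size, margin=0.2):
    """True if point lies in the cone between the sensor (origin) and the target.

    The pyramid spanned by a square target is approximated by a cone of
    half-angle atan(half_size / distance); margin (m, hypothetical value)
    excludes returns from the target surface itself.
    """
    dist = math.sqrt(sum(c * c for c in target_center))
    axis = [c / dist for c in target_center]
    along = sum(p * a for p, a in zip(point, axis))  # depth along the sensor-to-target axis
    if along <= 0.0 or along >= dist - margin:
        return False
    radial_sq = sum(p * p for p in point) - along * along
    radial = math.sqrt(max(0.0, radial_sq))  # distance from the axis
    return radial <= half_size * along / dist


def count_frustum_points(points, target_center, half_size, margin=0.2):
    """Number of returns in the frustum, e.g. per frame and per rain rate."""
    return sum(
        1 for p in points
        if in_sensor_to_target_frustum(p, target_center, half_size, margin)
    )
```

For example, for a 50 cm × 50 cm target centred 11.3 m ahead, one would call `count_frustum_points(frame, (11.3, 0.0, 0.0), 0.25)` on each frame. Because the cone is anchored on the target, ground returns fall outside it as long as the target is mounted above the ground.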

6. Discussion

6.1. Rain

6.2. Fog

6.3. Multi-Echo

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Hassen, A.A. Indicators for the Signal Degradation and Optimization of Automotive Radar Sensors under Adverse Weather Conditions. Ph.D. Thesis, Technische Universität Darmstadt, Darmstadt, Germany, 2008.

- Bernard, E.; Rivière, N.; Renaudat, M.; Pealat, M.; Zenou, E. Active and Thermal Imaging Performance under Bad Weather Conditions. 2014. Available online: https://oatao.univ-toulouse.fr/11729/ (accessed on 2 June 2021).

- YellowScan. Available online: https://www.yellowscan-lidar.com/knowledge/how-lidar-works/ (accessed on 2 June 2021).

- Ouster. Available online: https://ouster.com/ (accessed on 2 June 2021).

- Sick. Available online: https://www.generationrobots.com/en/401697-sick-lms500-20000-pro-hr-indoor-laser-scanner.html (accessed on 2 June 2021).

- Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; et al. Stanley: The robot that won the DARPA Grand Challenge. J. Field Robot. 2006, 23, 661–692.

- Radecki, P.; Campbell, M.; Matzen, K. All Weather Perception: Joint Data Association, Tracking, and Classification for Autonomous Ground Vehicles. arXiv 2016, arXiv:1605.02196.

- U.S. Department of Transportation. Vehicle Automation and Weather Challenges and Opportunities. Technical Report. 2016. Available online: https://rosap.ntl.bts.gov/view/dot/32494 (accessed on 2 June 2021).

- Rasshofer, R.H.; Spies, M.; Spies, H. Influences of weather phenomena on automotive laser radar systems. Adv. Radio Sci. 2011, 9, 49–60.

- Wojtanowski, J.; Zygmunt, M.; Kaszczuk, M.; Mierczyk, Z.; Muzal, M. Comparison of 905 nm and 1550 nm semiconductor laser rangefinders’ performance deterioration due to adverse environmental conditions. Opto-Electron. Rev. 2014, 22.

- Michaud, S.; Lalonde, J.F.; Giguere, P. Towards Characterizing the Behavior of LiDARs in Snowy Conditions. In Proceedings of the 7th Workshop on Planning, Perception and Navigation for Intelligent Vehicles, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; p. 6.

- Filgueira, A.; González-Jorge, H.; Lagüela, S.; Díaz-Vilariño, L.; Arias, P. Quantifying the influence of rain in LiDAR performance. Measurement 2017, 95, 143–148.

- Kutila, M.; Pyykonen, P.; Holzhuter, H.; Colomb, M.; Duthon, P. Automotive LiDAR performance verification in fog and rain. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 1695–1701.

- Bijelic, M.; Gruber, T.; Ritter, W. A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down? In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 760–767.

- Jokela, M.; Kutila, M.; Pyykönen, P. Testing and Validation of Automotive Point-Cloud Sensors in Adverse Weather Conditions. Appl. Sci. 2019, 9, 2341.

- Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather Influence and Classification with Automotive Lidar Sensors. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1527–1534.

- Li, Y.; Duthon, P.; Colomb, M.; Ibanez-Guzman, J. What happens for a ToF LiDAR in fog? arXiv 2020, arXiv:2003.06660.

- Yang, T.; Li, Y.; Ruichek, Y.; Yan, Z. LaNoising: A Data-driven Approach for 903nm ToF LiDAR Performance Modeling under Fog. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24–30 October 2020; pp. 10084–10091.

- Cerema. Available online: https://www.cerema.fr/fr/innovation-recherche/innovation/offres-technologie/plateforme-simulation-conditions-climatiques-degradees (accessed on 2 June 2021).

- Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.

- Available online: https://www.techniques-ingenieur.fr/base-documentaire/electronique-photonique-th13/applications-des-lasers-et-instrumentation-laser-42661210/imagerie-laser-3d-a-plan-focal-r6734/ (accessed on 2 June 2021).

- Fersch, T.; Buhmann, A.; Koelpin, A.; Weigel, R. The influence of rain on small aperture LiDAR sensors. In Proceedings of the 2016 German Microwave Conference (GeMiC), Bochum, Germany, 14–16 March 2016; pp. 84–87.

- Available online: https://www.livoxtech.com/horizon (accessed on 2 June 2021).

- Liu, Z.; Zhang, F.; Hong, X. Low-Cost Retina-Like Robotic Lidars Based on Incommensurable Scanning. IEEE/ASME Trans. Mechatron. 2021.

- Church, P.; Matheson, J.; Cao, X.; Roy, G. Evaluation of a steerable 3D laser scanner using a double Risley prism pair. In Degraded Environments: Sensing, Processing, and Display 2017; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10197.

- Cao, X.; Church, P.; Matheson, J. Characterization of the OPAL LiDAR under controlled obscurant conditions. In Degraded Visual Environments: Enhanced, Synthetic, and External Vision Solutions 2016; International Society for Optics and Photonics: Bellingham, WA, USA, 2016; Volume 9839.

- Marino, R.M.; Davis, W.R. Jigsaw: A Foliage-Penetrating 3D Imaging Laser Radar System. Linc. Lab. J. 2005, 15, 14.

- Velodyne. Available online: https://velodynelidar.com/products/ultra-puck/ (accessed on 2 June 2021).

- Cepton. Available online: https://www.cepton.com/ (accessed on 2 June 2021).

- AEye. Available online: https://www.aeye.ai/products/ (accessed on 2 June 2021).

- Wang, D.; Watkins, C.; Xie, H. MEMS Mirrors for LiDAR: A Review. Micromachines 2020, 11, 456.

- OTT. Available online: https://www.ott.com/products/meteorological-sensors-26/ott-parsivel2-laser-weather-sensor-2392/ (accessed on 2 June 2021).

- Guyot, A.; Pudashine, J.; Protat, A.; Uijlenhoet, R.; Pauwels, V.R.N.; Seed, A.; Walker, J.P. Effect of disdrometer type on rain drop size distribution characterisation: A new dataset for south-eastern Australia. Hydrol. Earth Syst. Sci. 2019, 23, 4737–4761.

- Wang, P.; Pruppacher, H. Acceleration to Terminal Velocity of Cloud and Raindrops. J. Appl. Meteorol. 1977, 16, 275–280.

- FLIR. Available online: https://www.flir.com/products/blackfly-gige/ (accessed on 2 June 2021).

- Carballo, A.; Lambert, J.; Monrroy-Cano, A.; Wong, D.R.; Narksri, P.; Kitsukawa, Y.; Takeuchi, E.; Kato, S.; Takeda, K. LIBRE: The Multiple 3D LiDAR Dataset. arXiv 2020, arXiv:2003.06129.

- Park, J.I.; Park, J.; Kim, K.S. Fast and Accurate Desnowing Algorithm for LiDAR Point Clouds. IEEE Access 2020, 8, 160202–160212.

- Shamsudin, A.U.; Ohno, K.; Westfechtel, T.; Takahiro, S.; Okada, Y.; Tadokoro, S. Fog removal using laser beam penetration, laser intensity, and geometrical features for 3D measurements in fog-filled room. Adv. Robot. 2016, 30, 729–743.

- Ouster-Digital-vs-Analog-lidar. Available online: https://ouster.com/resources/webinars/digital-vs-analog-lidar/ (accessed on 2 June 2021).

- Doppler Radar Characteristics of Precipitation at Vertical Incidence. 2006. Available online: https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/RG011i001p00001 (accessed on 2 June 2021).

| Sensors | Experimental Conditions | Metrics | Further Analysis |
|---|---|---|---|
| Ref. [11]: Hokuyo UTM-30LX-EW, SICK LMS200, SICK LMS151, Velodyne HDL-32E | Natural snow | Detected range, detected beam angle, proportion of snowflake echoes, spatial distribution | Statistical approach; Bayesian framework for the spatial distribution of echoes |
| Ref. [12]: Velodyne VLP-16 | Natural rain; multiple urban surfaces | Range, number of points, intensity | ∅ |
| Ref. [13]: Hello-World 1550 nm, Velodyne VLP-16, Ibeo Lux | Artificial rain; artificial fog; targets of various reflectivity | Signal attenuation, pulse width, intensity | Comparison of the 905 nm and 1550 nm wavelengths |
| Ref. [14]: Velodyne HDL-64 S2/S3, Ibeo Lux/HD | Artificial fog | Maximum viewing distance, number of points, intensity | Emitted power levels, quality of scanning patterns, multi-echo capabilities |
| Ref. [15]: Ibeo Lux, Velodyne VLP-16, Ouster OS1-64, Robosense RS-32, Cepton HR80T/W | Artificial fog; natural snow; targets of various reflectivity | Range variations; qualitative analysis of point clouds | ∅ |
| Ref. [16]: Velodyne VLP-16, Valeo Scala | Artificial rain; artificial fog | Intensity/pulse width, number of points, spatial distributions, multiple echoes | Range; weather classification (SVM and KNN) |
| Ref. [17]: Velodyne VLP-32 | Artificial fog | Range, intensity | Gaussian process regression to assess the minimum visibility of objects, extended in [18] |

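| Type | Objects | Reflectivity | Distance (in m) | Label |
|---|---|---|---|---|
| Lambertian surfaces (flat squares) | 1 m × 1 m | 80% | 23 | a1 |
| Lambertian surfaces (flat squares) | 50 cm × 50 cm | 10%, 50%, 90% | 11.3 | b1, b2, b3 |
| Lambertian surfaces (flat squares) | 30 cm × 30 cm | 10%, 50%, 90% | 17.3 | c1, c2, c3 |
| Road objects | Road sign | High | 8 | r1 |
| Road objects | Boy dummy | Unknown | 12.5 | r2 |
| Road objects | Woman dummy | Unknown | 21 | r3 |
| Road objects | Road cones | High on stripes | 6.5 and 10.7 | r4 |
| Road objects | Tire | Low | 15.5 | r5 |
| Road objects | Concrete | Low | 12.5 | r6 |
| Road objects | Lane | High | 0 → 7 | r7 |
| Road objects | Beacons | High | 0 → 23 | r8 |
| Road objects | Tree branch | Unknown | 8 | r9 |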
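The sizes and distances in the table above determine how many beams can hit each target. As a rough, hedged sketch, a flat target of size s at distance R, facing the sensor, subtends an angle of 2·atan(s/2R). Dividing that angle by the angular resolution gives a lower bound on the number of beams per axis for a regular scan grid. The 0.2° resolution used below is a hypothetical placeholder, not the specification of any tested sensor. Non-uniform patterns (Risley prisms, MEMS) and beam divergence are not modelled.

```python
import math


def subtended_angle_deg(size_m: float, distance_m: float) -> float:
    """Full angle (degrees) subtended by a flat target of given size facing the sensor."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def expected_points(width_m, height_m, distance_m, h_res_deg, v_res_deg):
    """Lower bound on beams hitting the target for a regular scan grid."""
    n_h = math.floor(subtended_angle_deg(width_m, distance_m) / h_res_deg)
    n_v = math.floor(subtended_angle_deg(height_m, distance_m) / v_res_deg)
    return n_h * n_v


if __name__ == "__main__":
    # Flat squares from the table; 0.2 deg x 0.2 deg is a hypothetical resolution.
    for label, size, dist in (("a1", 1.0, 23.0), ("b", 0.5, 11.3), ("c", 0.3, 17.3)):
        print(label, round(subtended_angle_deg(size, dist), 2),
              expected_points(size, size, dist, 0.2, 0.2))
```

The 30 cm squares at 17.3 m subtend about 1°, less than half the angle of the 50 cm squares at 11.3 m. Even in clear weather, they therefore carry far fewer points.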

| Sensor | Type | Maximum Echo Number | Wavelength (in nm) | Points in Single Scan | Intensity (in bit) |
|---|---|---|---|---|---|
| Velodyne VLP-32 | Spinning | 2 | 905 | 35 k | 8 |
| Ouster OS1-128 | Spinning | 1 | 850 | 255 k | 16 |
| Livox Horizon | Risley prisms | 2 | 905 | 25 k | 8 |
| Cepton 860 | Micro motion | 1 | 905 | 30 k | 8 |
| AEye 4SightM | MEMS | 4 | 1550 | 22 k | 16 |
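Sensors with a maximum echo number above 1 can report several returns for a single emitted pulse, and the point cloud then depends on which return is kept. The following minimal sketch illustrates three common selection strategies (first, last, strongest). The `Echo` type and the example ranges and intensities are hypothetical. The sketch does not reproduce any vendor's firmware logic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Echo:
    range_m: float
    intensity: float


def select_echo(echoes: List[Echo], strategy: str = "last") -> Optional[Echo]:
    """Pick one return among the echoes of a single emitted pulse."""
    if not echoes:
        return None
    if strategy == "first":
        return min(echoes, key=lambda e: e.range_m)
    if strategy == "last":
        return max(echoes, key=lambda e: e.range_m)
    if strategy == "strongest":
        return max(echoes, key=lambda e: e.intensity)
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    # Hypothetical pulse in fog: a near droplet echo at 2.1 m and an
    # attenuated target echo at 23 m.
    pulse = [Echo(2.1, 30.0), Echo(23.0, 12.0)]
    print(select_echo(pulse, "last"))
```

In this illustrative pulse, the "strongest" and "first" strategies both return the droplet echo, and only "last" recovers the target. This is the kind of situation where multi-echo capability can help in fog.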

| Sensor | Mean Number of Points | Std (Points) | Mean Intensity | Std (Intensity) |
|---|---|---|---|---|
| VLP-32 | 60.55 | 0.93 | 11.07 | 0.50 |
| OS1-128 | 87.05 | 0.29 | 47,482.61 | 1268.17 |
| Livox Horizon | 51.82 | 4.60 | 104.40 | 4.13 |
| Cepton 860 | 109.08 | 3.31 | 1.51 | 0.27 |
| AEye 4SightM | 62.85 | 1.62 | ∅ | ∅ |
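Statistics such as those in the table above can be computed per frame from the points that fall in a region around a target. The following minimal sketch assumes an axis-aligned box in the sensor frame, with hypothetical bounds. Whether the population or the sample standard deviation was used is not stated here, so `statistics.pstdev` is an assumption. Points may carry extra fields (for example intensity) after x, y, z. Those fields are ignored by the box test because `zip` stops at the three box coordinates.

```python
import statistics


def points_in_box(points, box_min, box_max):
    """Count points (x, y, z, ...) inside an axis-aligned box in the sensor frame."""
    return sum(
        1 for p in points
        if all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))
    )


def target_statistics(frames, box_min, box_max):
    """Mean and population standard deviation of per-frame point counts."""
    counts = [points_in_box(frame, box_min, box_max) for frame in frames]
    return statistics.mean(counts), statistics.pstdev(counts)


if __name__ == "__main__":
    # Three illustrative frames around a target 23 m ahead (hypothetical data).
    frames = [
        [(23.0, 0.0, 0.0), (23.0, 0.2, 0.1), (5.0, 0.0, 0.0)],
        [(23.0, 0.0, 0.0)],
        [(23.0, 0.1, 0.1), (23.05, -0.1, 0.0)],
    ]
    print(target_statistics(frames, (22.9, -0.5, -0.5), (23.1, 0.5, 0.5)))
```

The same loop, applied to the intensity field of the retained points, gives the intensity columns. Intensities from sensors with different bit depths and vendor scalings are not directly comparable.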

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Montalban, K.; Reymann, C.; Atchuthan, D.; Dupouy, P.-E.; Riviere, N.; Lacroix, S. A Quantitative Analysis of Point Clouds from Automotive Lidars Exposed to Artificial Rain and Fog. Atmosphere 2021, 12, 738. https://doi.org/10.3390/atmos12060738
